Participating in the challenge

Challenge Rules & FAQs

Please refer to our official challenge rules for all information relating to eligibility and participation in the AI for Industry Challenge.

For answers to common questions, please refer to our FAQs at the bottom of the page.

Participate individually or as a team

To participate in the challenge, please complete and submit the registration form by April 17, 2026. After this date, we will not accept new applications for individuals or teams.

Teams

You can participate either individually or as part of a team. After registering for the challenge, you will have the opportunity to register your team name and invite members to join your team.

If you are participating individually, you will still need to enter a team name on the team registration form.

Team rules:

- Teams can consist of up to 10 participants.

- You may only create or join one team. Being part of multiple teams is not allowed.

- All team members must be registered by the deadline to take part.

Each team will designate one team leader during the team registration process. The team leader can make updates to the team and is responsible for managing team submissions on behalf of the team.

Prizes

$180,000 in cash prizes

- First place team: $100,000

- Second place team: $40,000

- Third place team: $20,000

- Fourth and fifth place teams: $10,000 each

Prize distribution will be communicated to team leaders after registration.

How the challenge works

Task

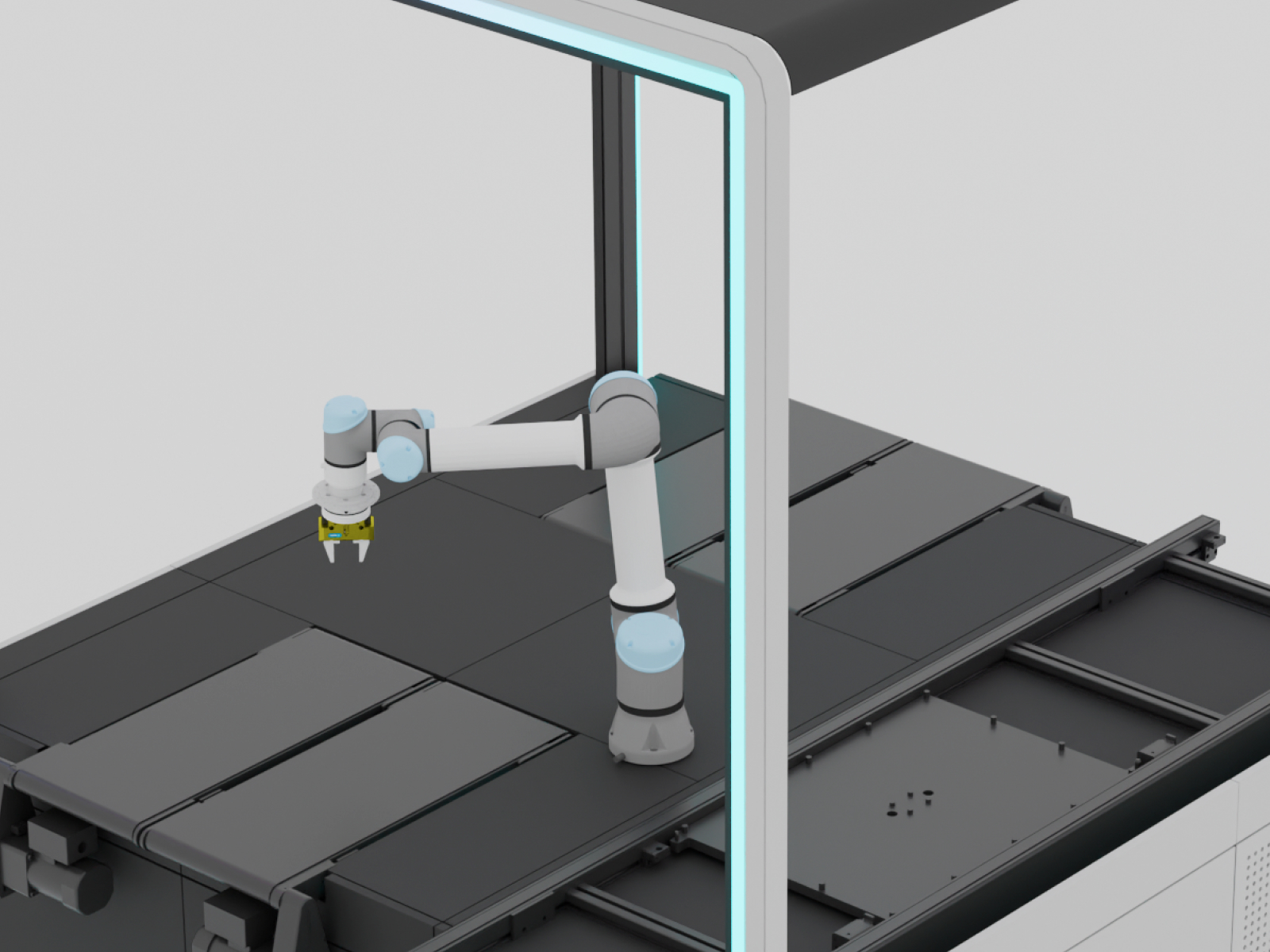

The AI for Industry Challenge invites participants to tackle complex dexterous manipulation tasks inspired by real-world assembly problems in electronics.

The core focus is the assembly and wiring of server trays, from the insertion of various connectors to complete cable handling.

Automating cable assembly and wiring is notoriously difficult because of the high mix and variance between cable types, the high cost of errors, and the complex technical hurdles of modeling and manipulating deformable objects.

Timeline and phases

The challenge will officially begin on February 11 and run through July 2026. It has three phases:

- Qualification (~3 months): Participants will train and test their cable assembly models in simulation.

- Phase #1 (~1 month): Qualified teams will advance and gain access to Intrinsic Flowstate to develop a complete cable handling solution.

- Phase #2 (~1 month): Top teams will move on to deploy and refine their solutions on a physical workcell provided by Intrinsic for real-world testing and evaluation.

Participant toolkit

Each participant who registers will receive a toolkit as part of the challenge, which includes:

- Scene description*: Complete environment in SDFormat (.sdf).

- High-fidelity assets*: Robot (URDF/SDF), sensors (SDFormat), and environment models.

- Standardized ROS interfaces: Defined topics, services, and message types for sensors and commands.

- Reference controller & HAL: Baseline controller and hardware abstraction layer for simulated and real robots.

- Formal task description: Document outlining objectives, rules, and constraints.

- Baseline Gazebo environment: Fully configured simulation for reference and evaluation.

*Please note that partner toolkits and formats may differ.

Intrinsic Flowstate & Intrinsic Vision Model (IVM)

Teams that qualify for Phase #1 will gain access to Intrinsic Flowstate, our development environment. In Flowstate, you can integrate your trained model and leverage Intrinsic’s capabilities to build, debug, and validate your complete solution.

Participants will also receive access to the Intrinsic Vision Model (IVM), our award-winning foundation model for a variety of perception tasks. IVM uses a growing set of specialized transformers to support advanced perception.

Submissions

At each phase, teams must submit their models or solutions to be considered for advancement in the challenge and eligibility for prizes. Each team leader will receive a unique authentication token to upload submissions. Teams may make multiple submissions before the submission deadline.

Evaluation

Scoring across all three phases of the challenge will be automated and determined by a combination of the following evaluation criteria:

- Model validity: Submissions must load without errors and generate valid robot commands on the required ROS topic. Invalid submissions will not be scored.

- Task success: A binary (success/failure) metric will be applied to each cable insertion.

- Precision: Submissions will be scored based on how closely the connectors are inserted to their respective targets.

- Safety: Penalties will be applied for any collisions or for exerting excessive forces on connectors or cables.

- Efficiency: The overall cycle time to complete the entire set of assembly tasks will be measured, rewarding more efficient solutions.
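The criteria above are listed, but how they are weighted and combined is not published here. As a rough illustration only, the Python sketch below shows one way a composite score could gate on validity, credit each successful insertion, add a precision term, subtract safety penalties, and reward a shorter cycle time. Every weight, the tolerance and time budget, the field names, and the linear precision and efficiency terms are hypothetical assumptions, not the official scoring formula.

```python
from dataclasses import dataclass
from typing import List, Optional

# Hypothetical weights: the real combination of criteria is not published.
W_SUCCESS = 1.0
W_PRECISION = 0.5
W_COLLISION_PENALTY = 0.5
W_FORCE_PENALTY = 0.25
W_EFFICIENCY = 0.2


@dataclass
class InsertionResult:
    inserted: bool            # task success: binary, per cable insertion
    position_error_mm: float  # precision: distance from connector to its target
    collisions: int           # safety: number of collisions during this insertion
    force_violations: int     # safety: times an (assumed) force limit was exceeded


def score_trial(
    valid: bool,
    insertions: List[InsertionResult],
    cycle_time_s: float,
    time_budget_s: float = 600.0,  # hypothetical reference cycle time
    tolerance_mm: float = 5.0,     # hypothetical precision tolerance
) -> Optional[float]:
    """Return a hypothetical composite score, or None for an invalid submission."""
    if not valid:
        return None  # model validity gate: invalid submissions are not scored

    total = 0.0
    for r in insertions:
        # Task success: 1 point per successful insertion, 0 otherwise.
        total += W_SUCCESS * (1.0 if r.inserted else 0.0)
        if r.inserted:
            # Precision: 1.0 at zero error, falling linearly to 0 at the tolerance.
            total += W_PRECISION * max(0.0, 1.0 - r.position_error_mm / tolerance_mm)
        # Safety: fixed penalty per collision and per force violation.
        total -= W_COLLISION_PENALTY * r.collisions
        total -= W_FORCE_PENALTY * r.force_violations

    # Efficiency: bonus that shrinks linearly as cycle time approaches the budget.
    total += W_EFFICIENCY * max(0.0, 1.0 - cycle_time_s / time_budget_s)
    return total


if __name__ == "__main__":
    results = [
        InsertionResult(inserted=True, position_error_mm=1.0, collisions=0, force_violations=0),
        InsertionResult(inserted=False, position_error_mm=0.0, collisions=1, force_violations=0),
    ]
    print(round(score_trial(True, results, cycle_time_s=300.0), 3))
```

In this sketch the validity check runs first so that an invalid submission yields no score at all rather than a low one, mirroring the rule that invalid submissions will not be scored.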

Evaluation Committee

In addition to quantitative scoring, a panel of expert judges will review and evaluate final submissions based on criteria such as innovation, technical soundness, scalability, and alignment with the challenge mission.

Judges will be announced at a later date.
